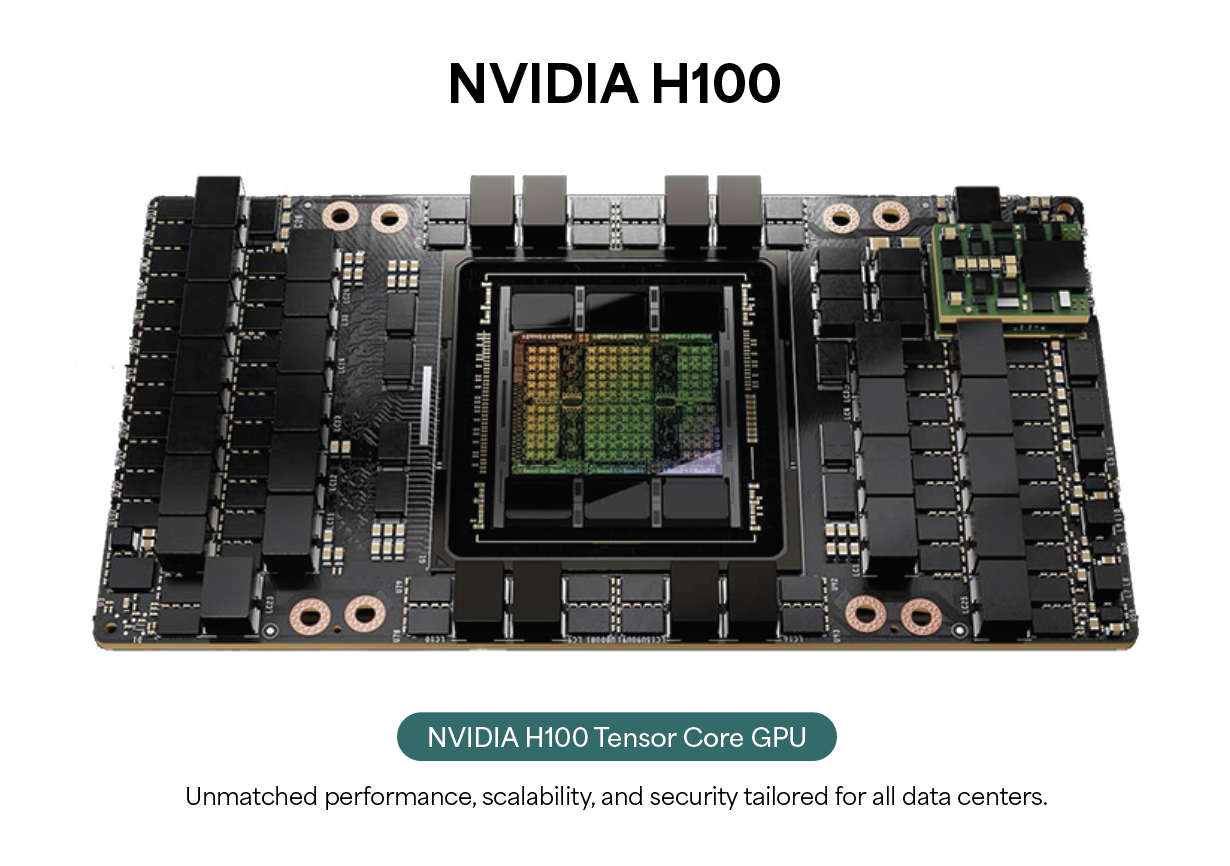

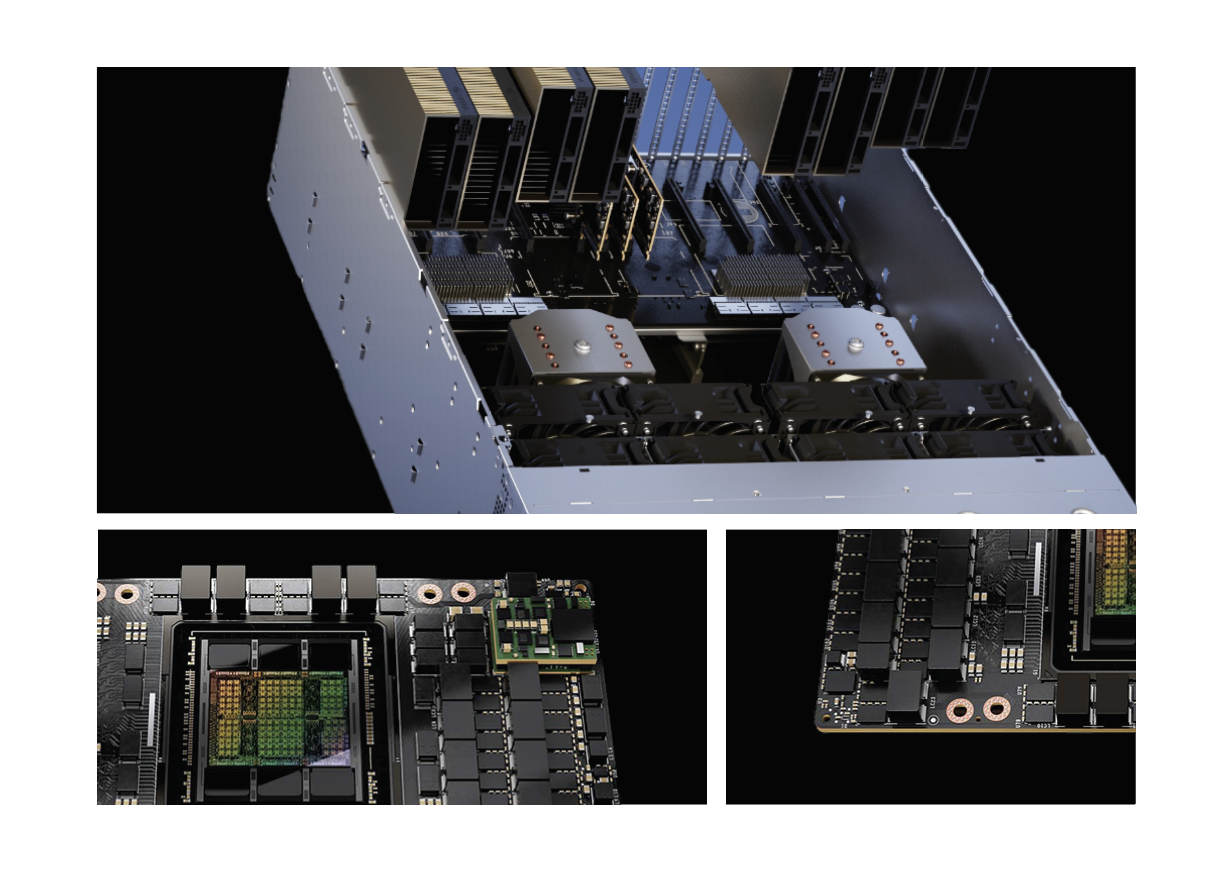

Access unparalleled performance, scalability, and security for any workload using the NVIDIA® H100 Tensor Core GPU. Harnessing the power of the NVIDIA NVLink® Switch System, you can connect up to 256 H100 GPUs to accelerate exascale workloads. The GPU has a dedicated Transformer Engine designed to tackle trillion-parameter language models. Through a synergy of technological advancements, the H100 can accelerate large language models (LLMs) by an astounding 30X compared to the previous generation, establishing a new standard for industry-leading conversational AI.

Supercharge large language model inference.

The PCIe-based H100 NVL with NVLink bridge is tailored for large language models (LLMs) with up to 175 billion parameters. It combines the Transformer Engine, NVLink, and 188GB of HBM3 memory to deliver high performance and straightforward scaling in any data center, bringing LLMs to the mainstream. Servers equipped with H100 NVL GPUs increase GPT-175B model performance by 12X over NVIDIA DGX™ A100 systems while maintaining low latency in power-constrained data center environments.
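As a rough sanity check on why 188GB matters for a 175-billion-parameter model, the sketch below estimates the memory needed for the model weights alone at several precisions. The bytes-per-parameter values are the standard sizes for each format; the helper names `weight_footprint_gb` and `fits_in_memory` are hypothetical, and the estimate deliberately ignores activations, KV cache, and framework overhead, so a real deployment needs additional headroom.

```python
# Back-of-the-envelope estimate: weights only, no KV cache or activations.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "FP8": 1}

H100_NVL_MEMORY_GB = 188   # figure quoted above (decimal GB assumed)
GPT_175B_PARAMS = 175e9    # parameter count quoted above


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Memory needed to hold the weights, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_memory(num_params: float, precision: str,
                   memory_gb: float = H100_NVL_MEMORY_GB) -> bool:
    """True if the weights alone fit within the given memory budget."""
    return weight_footprint_gb(num_params, precision) <= memory_gb


for precision in BYTES_PER_PARAM:
    size = weight_footprint_gb(GPT_175B_PARAMS, precision)
    verdict = "fits" if fits_in_memory(GPT_175B_PARAMS, precision) else "does not fit"
    print(f"{precision}: {size:.0f} GB of weights, {verdict} in {H100_NVL_MEMORY_GB} GB")
```

Under these assumptions, only the one-byte FP8 representation keeps the weights of a 175B-parameter model within 188GB, which is one way to read the pairing of FP8 support with this memory capacity.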

Enterprises are embracing AI at a mainstream level, requiring comprehensive, AI-ready infrastructure to propel them into this evolving era.

NVIDIA H100 GPUs for mainstream servers come with a five-year subscription that includes enterprise support and access to the NVIDIA AI Enterprise software suite, simplifying AI adoption without sacrificing performance. The suite gives organizations the AI frameworks and tools they need to build H100-accelerated AI workflows such as AI chatbots, recommendation engines, vision AI, and more.

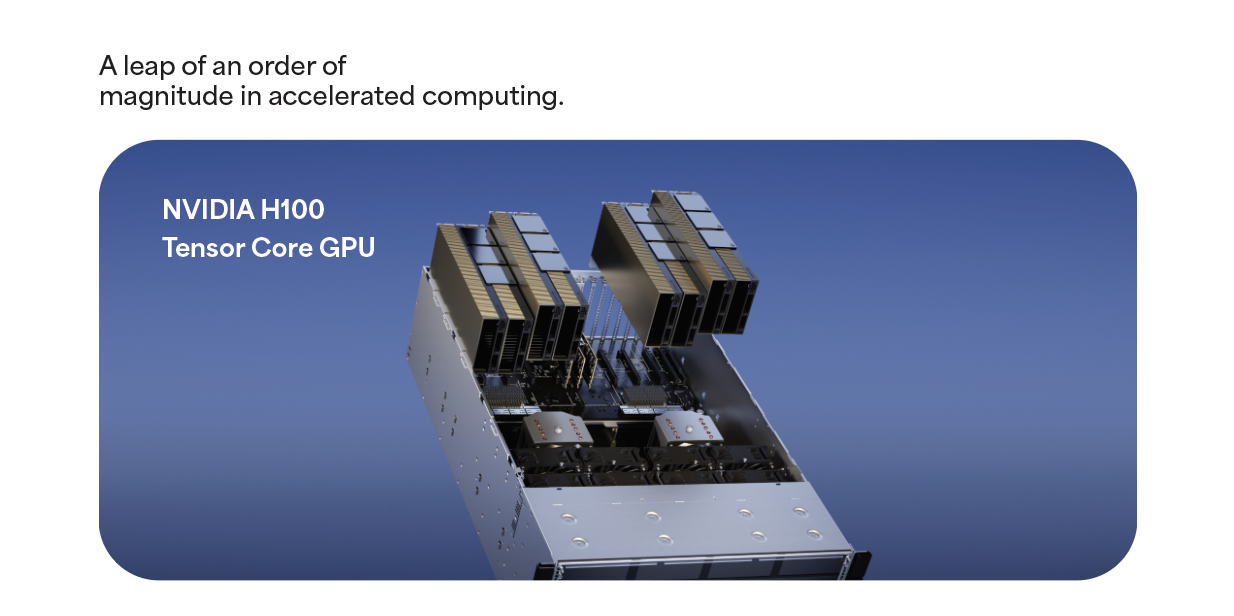

Transformational AI Training

The H100 features fourth-generation Tensor Cores and a Transformer Engine with FP8 precision, enabling up to 4X faster training than the previous generation for GPT-3 (175B) models. Deploying H100 GPUs at data center scale delivers exceptional performance and brings the next generation of exascale high-performance computing (HPC) and trillion-parameter AI within reach of all researchers.

Real-Time Deep Learning Inference

The H100 extends NVIDIA’s market-leading position in inference with several innovations that accelerate inference by up to 30X and deliver the lowest latency. Fourth-generation Tensor Cores accelerate all precisions: FP64, TF32, FP32, FP16, INT8, and the newly added FP8. Lower-precision formats reduce memory usage and increase performance while maintaining accuracy for large language models (LLMs).

Exascale High-Performance Computing

The NVIDIA data center platform consistently delivers performance gains beyond those predicted by Moore’s Law. The H100’s new AI capabilities further strengthen the combination of high-performance computing (HPC) and AI (HPC+AI), shortening the time to discovery for scientists and researchers working on the world’s most critical challenges.

Accelerated Data Analytics

Servers with H100 deliver substantial compute power along with 3 terabytes per second (TB/s) of memory bandwidth per GPU. Combined with the scalability of NVLink and NVSwitch™, this lets them run data analytics on massive datasets at high performance. Together with NVIDIA Quantum-2 InfiniBand, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, the NVIDIA data center platform is well positioned to accelerate these large workloads with high performance and efficiency.
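To put the 3 TB/s figure in perspective, the sketch below computes a lower bound on the time needed to read a GPU-resident dataset once from memory. It is an idealized estimate that assumes the full quoted bandwidth is sustained and that the data already sits in GPU memory; the function name `scan_time_ms` and the 60 GB example partition are hypothetical.

```python
# Idealized lower bound: one full pass over data already in GPU memory.
H100_MEMORY_BANDWIDTH_BYTES_PER_S = 3e12  # 3 TB/s per GPU, as quoted above


def scan_time_ms(data_bytes: float,
                 bandwidth_bytes_per_s: float = H100_MEMORY_BANDWIDTH_BYTES_PER_S) -> float:
    """Minimum time in milliseconds to read `data_bytes` once at full bandwidth."""
    return data_bytes / bandwidth_bytes_per_s * 1e3


# Hypothetical example: a 60 GB table partition held on a single GPU.
partition_bytes = 60e9
print(f"One pass over 60 GB: at least {scan_time_ms(partition_bytes):.1f} ms")
```

Real analytics queries are slower than this bound because of compute, irregular access patterns, and data movement between GPUs, but the estimate shows why memory bandwidth is the headline number for scan-heavy workloads.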

Enterprise-Ready Utilization

The H100 incorporates second-generation Multi-Instance GPU (MIG) technology, which maximizes GPU utilization by securely partitioning each GPU into as many as seven separate instances. With confidential computing support, the H100 enables secure, end-to-end, multi-tenant usage, making it well suited to cloud service provider (CSP) environments.

Built-In Confidential Computing

In contrast to traditional CPU-based confidential computing solutions, which may be restrictive for compute-intensive workloads such as AI and HPC, NVIDIA introduces Confidential Computing as a built-in security feature within the NVIDIA Hopper™ architecture. This groundbreaking feature positions the H100 as the world’s first accelerator with confidential computing capabilities. Users can now safeguard the confidentiality and integrity of their data and applications while harnessing the unparalleled acceleration provided by H100 GPUs.

Unmatched performance for large-scale AI and high-performance computing (HPC).

The Hopper Tensor Core GPU will power the NVIDIA Grace Hopper CPU+GPU architecture, purpose-built for terabyte-scale accelerated computing and delivering up to 10X higher performance on large-model AI and high-performance computing (HPC). The NVIDIA Grace CPU leverages the flexibility of the Arm® architecture to create a CPU and server architecture designed expressly for accelerated computing. The Hopper GPU is paired with the Grace CPU through NVIDIA’s ultra-fast chip-to-chip interconnect, which delivers 900GB/s of bandwidth, 7X faster than PCIe Gen5. This design is expected to deliver up to 30X higher aggregate system memory bandwidth to the GPU than today’s fastest servers and up to 10X higher performance for applications that process terabytes of data.
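The 7X comparison can be reproduced with simple arithmetic. The sketch below assumes the baseline is a PCIe Gen5 x16 link at its raw signaling rate of 32 GT/s per lane, counted in both directions and ignoring encoding and protocol overhead, and that the 900GB/s figure is likewise a bidirectional total. Those assumptions and the function name `pcie_bidirectional_gb_s` are not stated in the text above.

```python
# Assumed baseline: PCIe Gen5 x16, raw rate, both directions combined.
PCIE_GEN5_GT_PER_LANE = 32   # giga-transfers/s per lane, one bit per transfer
PCIE_LANES = 16
CHIP_TO_CHIP_GB_S = 900      # interconnect bandwidth quoted above


def pcie_bidirectional_gb_s(gt_per_lane: float, lanes: int) -> float:
    """Raw bidirectional bandwidth in GB/s, ignoring encoding overhead."""
    per_direction_gb_s = gt_per_lane * lanes / 8  # bits to bytes
    return 2 * per_direction_gb_s


pcie_gb_s = pcie_bidirectional_gb_s(PCIE_GEN5_GT_PER_LANE, PCIE_LANES)
ratio = CHIP_TO_CHIP_GB_S / pcie_gb_s
print(f"PCIe Gen5 x16: {pcie_gb_s:.0f} GB/s, interconnect advantage: {ratio:.2f}X")
```

With these inputs the PCIe baseline comes to 128 GB/s and the ratio to roughly 7, consistent with the claim in the text.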